AgentHands: Generating Interactive Hands Gestures for Spatially Grounded Agent Conversations in XR

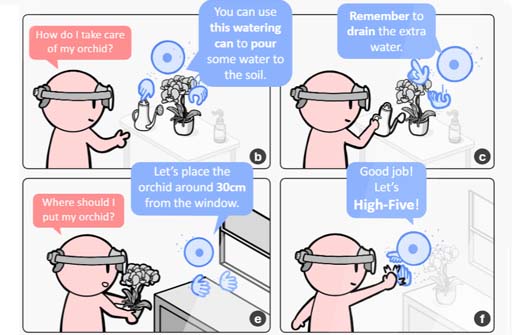

Communicating about spatial tasks via text or speech creates a mental mapping gap and limits an agent’s expressiveness. Inspired by co-speech gesture in face-to-face conversation, we propose offering AI agents hands to make responses clearer and more engaging. Guided by a taxonomy distilled from a formative study (N=10), we present AgentHands, an LLM-powered XR agent that augments conversational responses with synchronized, space-aware, and interactive hand gestures. Our contribution is a hand agent generation–execution pipeline: using a meta-instruction, AgentHands emits verbal responses embedded with GestureEvents aligned to specific words; each event specifies gesture type and parameters. At runtime, a parser converts events into time-stamped poses and motions, and an animation system renders an expressive pair of hands synchronized with speech. In a within-subjects study (N=12), AgentHands increased engagement and made spatially grounded conversations easier to follow than a speech-only baseline.
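
To make the generation and execution split concrete, the following is a minimal sketch of the parsing step, not the system's actual implementation. It assumes a hypothetical inline markup in which the LLM places a tag such as `<g {"type": "point", "target": "shelf"}>` immediately before the word the gesture should align to. The tag syntax, the `GestureEvent` field names, and the fixed `seconds_per_word` timing are illustrative assumptions; a real system would more plausibly take word timings from the speech synthesizer.

```python
import json
import re
from dataclasses import dataclass

# Hypothetical markup: <g {...flat JSON...}> placed right before the aligned word.
TAG = re.compile(r"<g (\{.*?\})>")


@dataclass
class GestureEvent:
    gesture_type: str   # e.g. "point", "rotate" (illustrative names)
    params: dict        # remaining gesture parameters
    word_index: int     # index of the aligned word in the spoken text
    word: str           # the aligned word itself
    start_s: float      # estimated start time in seconds


def parse_response(marked_up: str, seconds_per_word: float = 0.4):
    """Split an annotated response into plain speech text and timed events.

    Timing is a uniform per-word estimate (an assumption for this sketch).
    """
    words = []
    pending = []  # (word index the tag precedes, decoded tag payload)
    pos = 0
    for match in TAG.finditer(marked_up):
        words.extend(marked_up[pos:match.start()].split())
        pending.append((len(words), json.loads(match.group(1))))
        pos = match.end()
    words.extend(marked_up[pos:].split())

    events = []
    for index, spec in pending:
        if index >= len(words):
            continue  # tag at the very end has no word to align to
        spec = dict(spec)
        gesture_type = spec.pop("type")
        events.append(
            GestureEvent(
                gesture_type=gesture_type,
                params=spec,
                word_index=index,
                word=words[index],
                start_s=round(index * seconds_per_word, 3),
            )
        )
    return " ".join(words), events


if __name__ == "__main__":
    demo = (
        'Put the cup <g {"type": "point", "target": "shelf"}>there, '
        'then <g {"type": "rotate", "degrees": 90}>turn it.'
    )
    speech, events = parse_response(demo)
    print(speech)
    for event in events:
        print(event)
```

The speech string can be passed to a text-to-speech engine while the event list drives the hand animation, which keeps language generation and gesture execution decoupled in the way the pipeline description suggests.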